Artificial intelligence has transformed our capacity to process vast information streams and identify emerging threat patterns. However, AI tools must complement, not replace, human judgment in critical intelligence assessment.

The AI Revolution in Intelligence

The volume of information relevant to global threat assessment has grown exponentially. Traditional human-centered analysis cannot process the thousands of news articles, government reports, scientific papers, and social media posts published daily across multiple languages and time zones. Artificial intelligence systems excel at this scale of data processing.

Modern AI systems can identify emerging patterns across vast datasets, detect anomalies in seemingly routine information flows, and maintain continuous monitoring that never requires sleep or breaks. These capabilities represent genuine advances in intelligence collection and preliminary analysis, enabling more comprehensive and timely threat assessment than purely human systems could achieve.
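As a rough illustration of what detecting an anomaly in a routine information flow can mean in practice, the sketch below flags days whose article count deviates sharply from the preceding week. It is a minimal example under stated assumptions, not a description of any production system: the function name `flag_anomalies`, the seven-day window, the threshold of three standard deviations, and the sample counts are all hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count is far from the trailing-window mean.

    Hypothetical helper: window and threshold are illustrative defaults.
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history gives no scale for comparison; skip it.
            continue
        z_score = abs(daily_counts[i] - mu) / sigma
        if z_score > threshold:
            flagged.append(i)
    return flagged

# Illustrative (made-up) daily article counts for one topic.
counts = [10, 12, 11, 9, 10, 11, 12, 10, 48, 11]
flagged_days = flag_anomalies(counts)
print(flagged_days)
```

A flag of this kind only marks a day for attention; whether the spike reflects a genuine development or a reporting artifact remains a question for a human analyst.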

Human Oversight and Interpretation

Our editorial team maintains oversight of all AI-generated content, ensuring accuracy, context, and appropriate framing of complex global issues. Technology serves our mission, but human expertise guides our editorial direction.

AI systems excel at pattern recognition but often lack the contextual understanding essential for strategic intelligence assessment. A machine learning algorithm might detect increased military rhetoric between nations but cannot evaluate whether such language represents genuine escalation or domestic political positioning. Human analysts provide the cultural knowledge, historical perspective, and strategic insight necessary for accurate threat assessment.

Bias Detection and Mitigation

AI systems inherit biases from their training data and algorithmic design. News sources may exhibit regional, cultural, or political biases that influence AI-generated assessments. Our editorial process includes systematic bias detection and mitigation procedures to ensure balanced analysis.

We employ diverse human reviewers with different cultural backgrounds, analytical frameworks, and regional expertise to evaluate AI-generated content. This multi-perspective approach helps identify and correct biases that might otherwise influence threat assessments. Regular algorithm auditing and retraining further reduce systematic biases in our analytical processes.

Accuracy and Error Correction

AI systems can process information quickly but may misinterpret context, misidentify sources, or amplify errors in underlying data. Human editorial oversight provides essential accuracy checking and error correction that maintains the credibility our users require.

We maintain detailed logs of AI performance, tracking accuracy rates across different threat categories and analytical tasks. This data enables continuous improvement in AI system performance while providing transparency about the limitations of automated analysis. Each piece of content carries a clear indicator of the level of human review and validation it has undergone.
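One way such per-category tracking could be computed is sketched below. This is an assumption-laden example rather than the actual logging system: the log field names (`category`, `ai_label`, `reviewed_label`), the function `accuracy_by_category`, and the sample entries are hypothetical, and "accuracy" is taken here to mean the share of AI assessments that a human reviewer confirmed.

```python
from collections import defaultdict

def accuracy_by_category(log_entries):
    """Return {category: fraction of AI labels confirmed by human review}.

    Hypothetical helper; each entry is assumed to be a dict with the keys
    'category', 'ai_label', and 'reviewed_label'.
    """
    totals = defaultdict(int)
    confirmed = defaultdict(int)
    for entry in log_entries:
        totals[entry["category"]] += 1
        if entry["ai_label"] == entry["reviewed_label"]:
            confirmed[entry["category"]] += 1
    return {category: confirmed[category] / totals[category] for category in totals}

# Illustrative (made-up) review log.
sample_log = [
    {"category": "cyber", "ai_label": "elevated", "reviewed_label": "elevated"},
    {"category": "cyber", "ai_label": "elevated", "reviewed_label": "low"},
    {"category": "climate", "ai_label": "low", "reviewed_label": "low"},
]
rates = accuracy_by_category(sample_log)
print(rates)
```

Reporting the rate per category rather than as a single overall figure keeps weak performance in one threat area from being masked by strong performance in another.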

Ethical AI Implementation

The deployment of AI systems in intelligence analysis raises significant ethical questions about privacy, accountability, and the appropriate role of automated decision-making in security assessment. Our editorial standards prioritize ethical AI use that respects human rights and democratic values.

We implement privacy-preserving analysis techniques that focus on publicly available information rather than personal data collection. Our AI systems analyze aggregate patterns and trends without tracking individual behavior or communications. This approach enables effective threat assessment while maintaining respect for privacy rights and civil liberties.

The Future of Human-AI Collaboration

The most effective intelligence analysis combines AI capabilities with human expertise in a collaborative framework that leverages the strengths of both. AI systems provide comprehensive data processing and pattern recognition, while human analysts contribute contextual understanding, strategic assessment, and ethical oversight.

This human-AI collaboration model represents the future of intelligence analysis: not the replacement of human judgment by artificial systems, but the enhancement of human capabilities through intelligent automation. Our editorial commitment ensures this collaboration serves accuracy, transparency, and public accountability in all aspects of threat intelligence reporting.

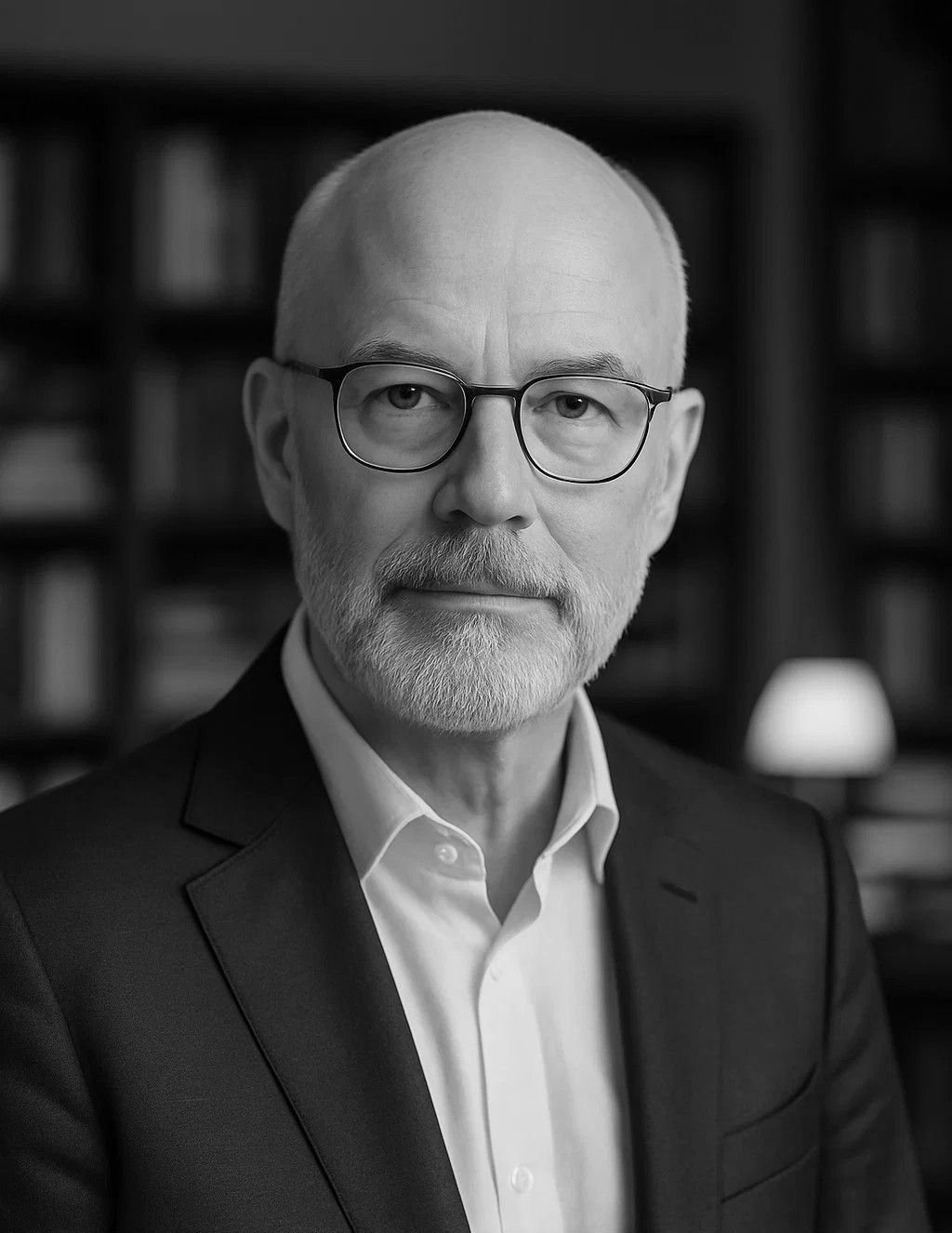

Alexander Ward

Editor-in-Chief

Alexander Ward provides editorial oversight and policy direction for Obxerver Earth's threat intelligence reporting. His expertise spans global security analysis, intelligence methodology, and responsible journalism in the digital age.